LLM Deep Dive Series (1 of 7): The Reality Behind Large Language Models (LLMs): A Tamed Monster?

This is the first in a 7-part series on Large Language Models (LLMs). Over the next few posts, I’ll blend theoretical insights with practical advice to help you understand how these models work at their core and how you can leverage them in business and technology.

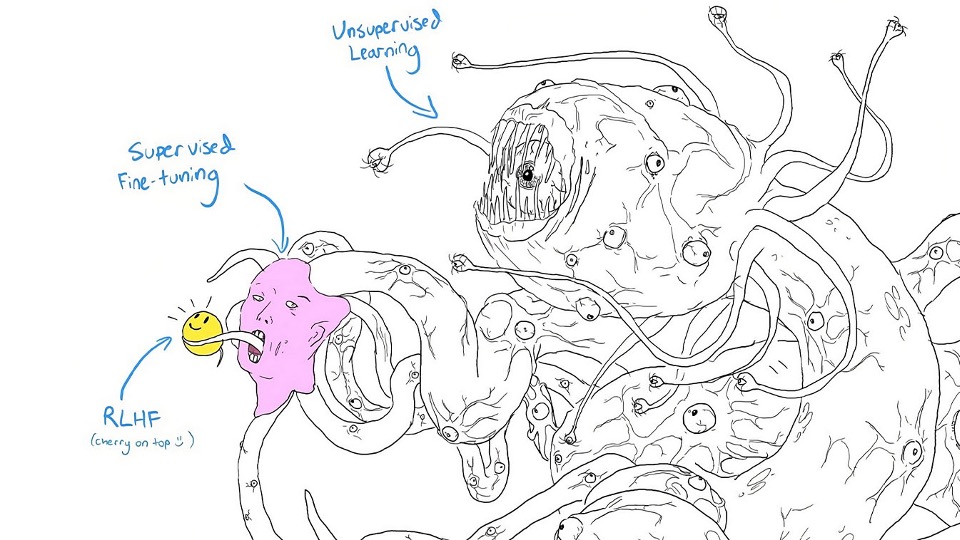

If you’ve worked with LLMs, you already know that there’s more happening beneath the surface than meets the eye. I find the image above to be a perfect representation of this—it vividly illustrates how we interact with LLMs today. It’s both fascinating and unsettling at the same time.

The Monster Behind the Curtain

The enormous structure in the background, the “monster,” symbolizes the raw model produced by unsupervised pretraining. Underneath all the fine-tuning and human-friendly responses lies a deep neural network with billions of parameters, trained on enormous datasets. This is the true core of an LLM, and even those of us deeply involved in AI development don’t fully understand all of its emergent behaviors.

The process starts with unsupervised (more precisely, self-supervised) learning: the model trains on massive amounts of raw text with no human-written labels, learning only to predict the next token from the ones that came before it. This stage creates the vast, complex, and somewhat alien intelligence that powers LLMs.
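To make the pretraining stage a little more concrete, here is a minimal sketch of learning from raw text with no labels at all. It is a toy word-pair counter, not how a real LLM is trained: real models use neural networks and subword tokens, and the `corpus` string and both function names below are made up for illustration. The core idea is the same, though: the only training signal is which word comes next.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it in the raw text."""
    counts = defaultdict(Counter)
    tokens = text.lower().split()
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_probs(counts, prev):
    """Turn the follow-up counts for `prev` into a probability distribution."""
    followers = counts[prev]
    total = sum(followers.values())
    return {tok: n / total for tok, n in followers.items()}

# Hypothetical toy corpus; a real model sees vastly more raw text.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
probs = next_token_probs(model, "the")
print(probs)  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```

Nobody told this toy model that “cat” is a likely word after “the”; it picked that up from the text alone. Scale the same principle up by many orders of magnitude and you get the monster.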

Fine-Tuning the Beast

💡 Supervised Fine-Tuning can be seen as a mask placed over the monster, giving it a more “human” appearance. It adjusts the model’s weights using a comparatively small set of curated examples, so it doesn’t fundamentally change the underlying system; it makes the outputs more relevant to the specific tasks we care about.

🌟 RLHF (Reinforcement Learning from Human Feedback) is the final touch, the “cherry on top.” It optimizes the model against human preference judgments, guiding it toward responses that people rate as helpful or valuable. However, it doesn’t truly tame the beast. Instead, it nudges the model toward behaving more predictably.

The Hidden Complexity

So, what does all of this mean?

🔍 Hidden Complexity: While we often interact with LLMs and get impressively coherent, human-like responses, there’s a massive, largely opaque structure behind these outputs. This complexity comes from unsupervised learning on vast datasets, which leads to emergent behaviors that are often surprising, even to experts.

🤖 Human-Like Layering: Fine-tuning and RLHF are designed to put a human-like layer on top of this raw intelligence, making the model’s responses feel more relatable and natural. But beneath the surface, LLMs are still driven by raw statistical patterns, correlations, and probabilities.
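Here is a rough sketch of what “driven by probabilities” means in practice. At each step a model produces a raw score (a logit) for every candidate token, and a softmax turns those scores into probabilities. The candidate words and scores below are invented for illustration, and `softmax` is a hand-written helper rather than any particular library’s API.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw token scores into probabilities that sum to 1."""
    scaled = [score / temperature for score in logits.values()]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return {tok: e / total for tok, e in zip(logits, exps)}

# Hypothetical scores for the next word after "The capital of France is".
logits = {"Paris": 5.0, "Lyon": 2.0, "banana": -1.0}

probs = softmax(logits)                   # default temperature
sharp = softmax(logits, temperature=0.5)  # lower temperature, more confident

for token, p in probs.items():
    print(f"{token}: {p:.3f}")
```

Even the “wrong” answers keep a nonzero probability. The polished response you see is one draw from a distribution like this, which is why the same prompt can produce different answers.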

Early Models: The GPT-3.5 Experience

For those of us who worked with earlier models like GPT-3.5, the experience was different. The fine-tuning layered on top was less refined, and while the models could surprise you with raw, unexpected insights, they would also make very silly mistakes. These earlier versions felt less “polished” but offered more access to the underlying complexity.

Newer Models: The GPT-4 Trade-Off

The newer models, such as GPT-4, have gained more “human-like common sense” and deliver more consistent outputs. However, this comes with a trade-off. As these models become more reliable, some of the raw, untamed insights from earlier iterations get lost. It’s akin to the joke about “building something mediocre but perfectly consistent” instead of aiming for perfection.

The Bigger Question

⚠️ The Bigger Question: Are we truly in control of this complexity? Or are we just covering it up with enough layers to make it seem like something we understand? The image above reminds us that, while LLMs are advancing rapidly, the depth of what’s happening inside these models remains elusive, even to the most knowledgeable minds.

What’s Next?

This post marks the beginning of a deep dive into LLMs. In the coming posts, I will cover a variety of topics to help you build a deeper intuition around these models, including:

- How Unsupervised Learning Works: Breaking down the heart of LLMs.

- The Role of Fine-Tuning: How human-guided layers influence output.

- Emergent Behavior: The fascinating and unexpected capabilities that arise when large models are trained on large datasets.

- Building LLM-Driven Applications: Best practices and frameworks for success.

- AI Ethics and Safety: The critical challenges as AI becomes more integrated.

- Harnessing LLMs for Business Impact: Practical insights for leveraging AI in real-world scenarios.

Stay tuned as we explore these topics from both a theoretical and a hands-on, practical perspective.

P.S. Don’t forget to check out my book, Grow Your Business with AI, for a deeper dive into leveraging AI for business success! 📚